I Am Graphics And So Can You :: Part 6 :: Pipelines

I'm here to lay some pipelines. *Derp*

It was Vulkan pipelines that really made things click for me. Everything seemed so disassociated before. I knew what shaders did, I knew what data the GPU needed, and I knew what the individual pipeline functions were supposed to do. But I had so many questions. When was it ok to do X? When should I call Y function? Was this the GPU's responsibility or mine? Then I saw VkGraphicsPipelineCreateInfo and did a triple take. Who in their right mind would ever want to touch that thing? And then I heard how people were front loading pipeline compiles so they weren't happening intra frame. And then it dawned on me. It all fell into place. I started to see graphics as a cohesive process instead of a functional free-for-all. And now I wanted to touch VkGraphicsPipelineCreateInfo.

Over the series I've taken you on a grand tour of Vulkan, but I've always shied away from going into idRenderProgManager. This was for a very good reason. Pipelines are at the core of what Vulkan and graphics are all about. They tell the GPU what to do with draw commands from the beginning to the end. In Part 5 if you remember, there was a renderProgManager.CommitCurrent call right before the draw. This is because we're committing the final pipeline state that will inform the GPU how to draw what is being submitted in the subsequent draw call.

Let's start back at the beginning and catch up to present. Initialize()!

Fortunately DOOM 3 BFG only has a handful of shaders, so it's easy to just manually build them instead of relying on a complex system. FindShader will attempt to load a shader if not found. It's an API agnostic call, but let's look at the Vulkan implementation.

Cool, nothing complicated going on here. I'm not going to stop and talk about SPIR-V in any depth. Some of you will know what I'm talking about, and some may not. SPIR-V is the binary intermediate format that Vulkan consumes for shaders. It's actually quite nice to work with compared to other APIs. Instead of uploading a text buffer at run time, you precompile the shader outside the application and hand Vulkan that binary.
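To make the "upload that binary" part concrete, here's a minimal sketch of the sanity check you might run on a precompiled blob before handing it to Vulkan. This is not VkNeo's code; `ToSpirvWords` and `kSpirvMagic` are names I made up for illustration. The only facts it leans on are that a SPIR-V module is a stream of 32-bit words and that the first word is the magic number 0x07230203.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// First 32-bit word of every SPIR-V module, per the SPIR-V specification.
constexpr std::uint32_t kSpirvMagic = 0x07230203u;

// Hypothetical helper: copies raw file bytes into 32-bit words.
// Returns an empty vector if the blob cannot be a SPIR-V module
// ( size not a multiple of 4, or wrong magic number ).
// Assumes the file was written with the same endianness as the host.
std::vector<std::uint32_t> ToSpirvWords( const std::vector<unsigned char> & bytes ) {
    if ( bytes.empty() || bytes.size() % 4 != 0 ) {
        return {};
    }
    std::vector<std::uint32_t> words( bytes.size() / 4 );
    std::memcpy( words.data(), bytes.data(), bytes.size() );
    if ( words[ 0 ] != kSpirvMagic ) {
        return {};
    }
    return words;
}
```

The word vector is the shape vkCreateShaderModule wants: VkShaderModuleCreateInfo takes a pointer to 32-bit words in pCode and the size in bytes in codeSize.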

Let's bounce back to the next Vulkan routine from Init: CreateDescriptorSetLayout. Let's think about this... Descriptor... Set... Layout. The name is pretty indicative of its purpose. It's the layout of a descriptor set. So it's a collection of descriptors, but of what? Well if you remember from Part 2, I showed you the graphics pipeline from beginning to end. Some of those stages are programmable ( you upload shaders for them ) and some are fixed function ( you just toggle some bits for them ). Now shaders aren't very interesting without some input to work on. Yes the vertex shader receives, well, vertices. And the fragment shader receives *drumroll* fragments. But what if we want to use textures, uniform buffers, or other data? Well we have to describe a location where that data can be bound for the shader to use; hence descriptor set layout. Think of it as a way of interfacing your resources to your shader once on the GPU.

Let's look at CreateDescriptorSetLayout now.

So basically we just take the bindings we loaded for each shader associated with this renderprog and build a list of types paired with indices. "But why not restart the index at zero for the fragment shader?" you might ask. Well remember that shaders are just part of a pipeline. They take inputs, do some processing, and then forward this onto the next stage. Their output isn't a result unto itself. To this end we are describing every part of the pipeline where a resource can be bound, over the shaders we supplied. Let's see what we can bind at these locations ( note that VkNeo only uses two of these types ). All of the names below are prefixed with VK_DESCRIPTOR_TYPE_.

- SAMPLER - Can be used to sample images.

- COMBINED_IMAGE_SAMPLER - Combines a sampler and associated image. (VkNeo)

- SAMPLED_IMAGE - Used in conjunction with a SAMPLER.

- STORAGE_IMAGE - Read/write image memory.

- UNIFORM_TEXEL_BUFFER - Read only buffer of formatted texels, accessed through a buffer view.

- STORAGE_TEXEL_BUFFER - Read/write buffer of formatted texels, accessed through a buffer view.

- UNIFORM_BUFFER - Read only structured buffer. (VkNeo)

- STORAGE_BUFFER - Read/write structured buffer.

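- UNIFORM_BUFFER_DYNAMIC - Same as UNIFORM_BUFFER, but an extra offset is supplied when the descriptor set is bound. Better for storing multiple objects in one buffer and simply offsetting into them.

- STORAGE_BUFFER_DYNAMIC - Same as STORAGE_BUFFER, but an extra offset is supplied when the descriptor set is bound. Better for storing multiple objects in one buffer and simply offsetting into them.

- INPUT_ATTACHMENT - An image view that a fragment shader can read, unfiltered, at its own pixel location within a render pass.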
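To see why the index keeps counting across shaders, here's a rough sketch of building the binding list for a render program with one uniform buffer in the vertex shader and one combined image sampler in the fragment shader. Everything here ( `BindingType`, `Stage`, `LayoutBinding`, `AppendBindings`, `BuildGuiLayout` ) is a hypothetical stand-in, not the real Vulkan structs or VkNeo's code.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical stand-ins for the two descriptor types VkNeo uses.
enum class BindingType { UniformBuffer, CombinedImageSampler };
enum class Stage { Vertex, Fragment };

struct LayoutBinding {
    std::uint32_t index;   // matches layout( binding = N ) in the shader
    BindingType   type;
    Stage         stage;
};

// Appends one shader's bindings, continuing from the running index so
// every binding in the set gets a unique slot across all stages.
void AppendBindings( std::vector<LayoutBinding> & out, Stage stage,
                     const std::vector<BindingType> & types ) {
    std::uint32_t next = static_cast<std::uint32_t>( out.size() );
    for ( BindingType type : types ) {
        out.push_back( { next++, type, stage } );
    }
}

// One UBO for the vertex shader, one sampler for the fragment shader.
std::vector<LayoutBinding> BuildGuiLayout() {
    std::vector<LayoutBinding> bindings;
    AppendBindings( bindings, Stage::Vertex, { BindingType::UniformBuffer } );
    AppendBindings( bindings, Stage::Fragment, { BindingType::CombinedImageSampler } );
    return bindings;
}
```

The fragment shader's sampler lands on binding 1, not 0, because both shaders share the one descriptor set. In real Vulkan each entry would be a VkDescriptorSetLayoutBinding passed to vkCreateDescriptorSetLayout.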

Let's take a break and look at the whole pipeline again.

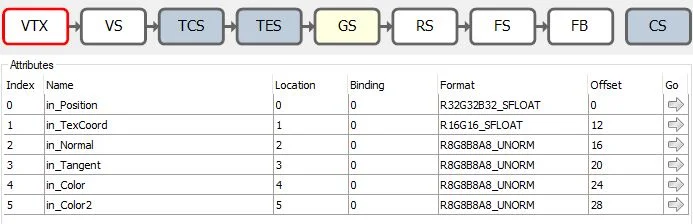

So far we've covered the Vertex Shader and Fragment Shader stages. The other programmable stages ( tessellation, geometry, and compute ) are not used in VkNeo. Let's return to idRenderProgManager::Init and look at setting up the Vertex Input stage of the pipeline as accomplished through CreateVertexDescriptions.

"Oh Vulkan's a lot of code." Yes, but it's mostly this. You'll fill out those structs and you'll like it! So DOOM 3 BFG has only three vertex layouts [ DRAW_VERT, DRAW_SHADOW_VERT, DRAW_SHADOW_VERT_SKINNED ]. DRAW_VERT is the most complex and often used, so we'll only go over that one ( others excluded above for brevity ). Essentially this just describes the vertex data we'll be supplying to the pipeline. DRAW_VERT is 32 bytes and is laid out as such.

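As a rough illustration of what "32 bytes" means for the Vertex Input stage, here's a sketch of a vertex struct plus the location/offset pairs you'd describe to the pipeline. The struct name, field names, and packing are my assumptions for this example, picked so they total 32 bytes and line up with the six inputs the vertex shader declares later in this post. Treat it as a stand-in, not the engine's actual definition.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical stand-in for DRAW_VERT. Field names and packing are assumed.
struct DrawVertSketch {
    float         xyz[ 3 ];       // location 0: position, 12 bytes
    std::uint16_t st[ 2 ];        // location 1: texcoord as two half floats, 4 bytes
    std::uint8_t  normal[ 4 ];    // location 2
    std::uint8_t  tangent[ 4 ];   // location 3
    std::uint8_t  color[ 4 ];     // location 4
    std::uint8_t  color2[ 4 ];    // location 5
};

static_assert( sizeof( DrawVertSketch ) == 32, "sketch must match the 32 byte vertex" );

// One entry per vertex attribute: shader location plus byte offset into the vertex.
struct AttributeSketch {
    std::uint32_t location;
    std::uint32_t offset;
};

const AttributeSketch kDrawVertAttributes[] = {
    { 0, static_cast<std::uint32_t>( offsetof( DrawVertSketch, xyz ) ) },
    { 1, static_cast<std::uint32_t>( offsetof( DrawVertSketch, st ) ) },
    { 2, static_cast<std::uint32_t>( offsetof( DrawVertSketch, normal ) ) },
    { 3, static_cast<std::uint32_t>( offsetof( DrawVertSketch, tangent ) ) },
    { 4, static_cast<std::uint32_t>( offsetof( DrawVertSketch, color ) ) },
    { 5, static_cast<std::uint32_t>( offsetof( DrawVertSketch, color2 ) ) },
};
```

In real Vulkan the stride ( 32 here ) goes into a VkVertexInputBindingDescription, and each location/offset pair, along with a format, goes into a VkVertexInputAttributeDescription.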
Remember in Part 4.5 we talked about the job of the front end vs backend? Well the frontend loads up the vertexCache and the backend draws what's in the vertexCache; simple as that. Before we get into next steps, let's look at at least one shader example. ( gui.vert and gui.frag which are used to draw UI elements )

    #version 450
    #pragma shader_stage( vertex )
    #extension GL_ARB_separate_shader_objects : enable

    layout( binding = 0 ) uniform UBO {
        vec4 rpMVPmatrixX;
        vec4 rpMVPmatrixY;
        vec4 rpMVPmatrixZ;
        vec4 rpMVPmatrixW;
    };

    layout( location = 0 ) in vec3 in_Position;
    layout( location = 1 ) in vec2 in_TexCoord;
    layout( location = 2 ) in vec4 in_Normal;
    layout( location = 3 ) in vec4 in_Tangent;
    layout( location = 4 ) in vec4 in_Color;
    layout( location = 5 ) in vec4 in_Color2;

    layout( location = 0 ) out vec2 out_TexCoord0;
    layout( location = 1 ) out vec4 out_TexCoord1;
    layout( location = 2 ) out vec4 out_Color;

    void main() {
        vec4 position = vec4( in_Position, 1.0 );
        gl_Position.x = dot( position, rpMVPmatrixX );
        gl_Position.y = dot( position, rpMVPmatrixY );
        gl_Position.z = dot( position, rpMVPmatrixZ );
        gl_Position.w = dot( position, rpMVPmatrixW );

        out_TexCoord0.xy = in_TexCoord.xy;
        out_TexCoord1 = ( in_Color2 * 2 ) - 1;
        out_Color = in_Color;
    }

The first thing we run into is layout( binding = 0 ) uniform UBO. This is taken care of in CreateDescriptorSetLayout, and the UBO is bound upon committing for a draw call. layout( location = n ) in describes the vertex input parameters set up in CreateVertexDescriptions. The layout( location = n ) out parameters are what get fed to the next programmable stage of the pipeline, the fragment shader.
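If the four dot products in main() look odd, they're just a matrix times vector multiply written out one row at a time. Here's a small CPU-side sketch of the same math. `Vec4`, `Dot`, and `TransformByRows` are illustration-only names, not anything from the engine.

```cpp
#include <array>
#include <cassert>

using Vec4 = std::array<float, 4>;

float Dot( const Vec4 & a, const Vec4 & b ) {
    return a[ 0 ] * b[ 0 ] + a[ 1 ] * b[ 1 ] + a[ 2 ] * b[ 2 ] + a[ 3 ] * b[ 3 ];
}

// Mirrors the vertex shader: each output component is the dot product of
// the position with one row of the MVP matrix ( X, Y, Z, W ).
Vec4 TransformByRows( const Vec4 & position, const std::array<Vec4, 4> & mvpRows ) {
    return { Dot( position, mvpRows[ 0 ] ),
             Dot( position, mvpRows[ 1 ] ),
             Dot( position, mvpRows[ 2 ] ),
             Dot( position, mvpRows[ 3 ] ) };
}
```

With identity rows the position comes back unchanged. Put a 5 in the last slot of the X row and every vertex with w = 1 shifts five units along x.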

    #version 450
    #pragma shader_stage( fragment )
    #extension GL_ARB_separate_shader_objects : enable

    layout( binding = 1 ) uniform sampler2D samp0;

    layout( location = 0 ) in vec2 in_TexCoord0;
    layout( location = 1 ) in vec4 in_TexCoord1;
    layout( location = 2 ) in vec4 in_Color;

    layout( location = 0 ) out vec4 out_Color;

    void main() {
        vec4 color = ( texture( samp0, in_TexCoord0.xy ) * in_Color ) + in_TexCoord1;
        out_Color.xyz = color.xyz * color.w;
        out_Color.w = color.w;
    }

See how the outputs from the vertex shader feed into the fragment one? This again reinforces the reality that all these stages are part of a pipeline for processing data. The final out_Color variable is what gets applied to the render target ( in VkNeo this will be our swap chain image we acquired earlier ).
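To follow one value all the way through, here's the per-pixel arithmetic from both shaders redone on the CPU. It's only a sketch: `Vec4`, `RemapToSigned`, and `ShadeGui` are names I invented, and the texture fetch is replaced by a texel you pass in directly.

```cpp
#include <array>
#include <cassert>

using Vec4 = std::array<float, 4>;

// Vertex stage: remaps a [0, 1] vertex color to [-1, 1],
// as in out_TexCoord1 = ( in_Color2 * 2 ) - 1.
Vec4 RemapToSigned( const Vec4 & c ) {
    return { c[ 0 ] * 2.0f - 1.0f, c[ 1 ] * 2.0f - 1.0f,
             c[ 2 ] * 2.0f - 1.0f, c[ 3 ] * 2.0f - 1.0f };
}

// Fragment stage: modulate the texel by the vertex color, add the remapped
// bias, then scale rgb by the resulting alpha before writing it out.
Vec4 ShadeGui( const Vec4 & texel, const Vec4 & vertexColor, const Vec4 & bias ) {
    Vec4 color;
    for ( int i = 0; i < 4; ++i ) {
        color[ i ] = texel[ i ] * vertexColor[ i ] + bias[ i ];
    }
    return { color[ 0 ] * color[ 3 ], color[ 1 ] * color[ 3 ],
             color[ 2 ] * color[ 3 ], color[ 3 ] };
}
```

A second vertex color of 0.5 in every channel remaps to zero, so it adds nothing. The final multiply means the rgb that reaches the render target has already been scaled by alpha.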

The last Vulkan thing we'll look at for idRenderProgManager::Init is CreateDescriptorPools. The reason for pooling descriptor sets will become clear once we look at CommitCurrent, so just stick with me.

This is all fairly straightforward. Again once we get to CommitCurrent, we'll talk about how descriptor sets are used and why we need to pool them. ( similar to why you'd pool anything really )
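If pooling is new to you, here's the idea boiled down to a toy: reserve a fixed number of sets up front, hand them out one per draw, and recycle the whole lot in one go. `DescriptorSetSketch` and `DescriptorPoolSketch` are hypothetical stand-ins, and when the pool gets reset ( here, whenever you call Reset ) is my assumption, not a statement about VkNeo.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a descriptor set: just remembers what was bound.
struct DescriptorSetSketch {
    int boundUbo   = -1;
    int boundImage = -1;
};

class DescriptorPoolSketch {
public:
    explicit DescriptorPoolSketch( std::size_t maxSets ) : sets_( maxSets ), used_( 0 ) {}

    // Hands out the next free set, or nullptr once the pool is exhausted.
    DescriptorSetSketch * Allocate() {
        if ( used_ == sets_.size() ) {
            return nullptr;
        }
        DescriptorSetSketch * set = &sets_[ used_++ ];
        *set = DescriptorSetSketch{};   // clear whatever the previous user bound
        return set;
    }

    // Makes every set available again in one shot.
    void Reset() { used_ = 0; }

    std::size_t Used() const { return used_; }

private:
    std::vector<DescriptorSetSketch> sets_;
    std::size_t                      used_;
};
```

The real calls that play these roles are vkCreateDescriptorPool, vkAllocateDescriptorSets, and vkResetDescriptorPool.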

Final Boss

It finally comes to it. This is probably what I would consider the core of Vulkandom. This is where graphics pipelines get made and used. I hope by now in the series it has really sunk in what a pipeline is and why I keep referring to it in terms of graphics. If not this next part will be brutal. But if you've been paying attention, then you can ride the waves and come out as the sea's master. Let's do this.

Holy frijoles that was a lot of code. But wait there's more! There's a second stage to this boss battle. Be not dismayed. Let's recap what's going on here. ( And please note the last comment in the code block )

- Get a pipeline satisfying the GL_State bits set in the higher level renderer.

- Allocate a descriptor set that matches the layout for the renderProg_t. We pool them because we need a descSet for each draw command.

- Grab the shader UBOs that match the layout.

- Loop over all the layout's binding points and properly describe and set the resource(s).

- Submit the descriptor set writes to the device.

- Bind the descriptor set.

- Bind pipeline.
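The "loop over the binding points" step is the one that tends to confuse people, so here's a stripped-down sketch of it. It walks a layout in binding order and pairs each slot with the next resource of the matching kind. `BindingType`, `WriteSketch`, and `BuildWrites` are made up for this example, and resources are plain indices instead of real buffers and images.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

enum class BindingType { UniformBuffer, CombinedImageSampler };

// Hypothetical record of one descriptor write: which slot gets which resource.
struct WriteSketch {
    std::uint32_t binding;
    BindingType   type;
    int           resource;   // index into the UBO list or the image list
};

// Walks the layout in binding order and pairs each slot with the next
// unused resource of the matching kind.
std::vector<WriteSketch> BuildWrites( const std::vector<BindingType> & layout,
                                      int uboCount, int imageCount ) {
    std::vector<WriteSketch> writes;
    int nextUbo = 0;
    int nextImage = 0;
    for ( std::uint32_t i = 0; i < layout.size(); ++i ) {
        if ( layout[ i ] == BindingType::UniformBuffer ) {
            assert( nextUbo < uboCount );       // layout asked for more UBOs than supplied
            writes.push_back( { i, layout[ i ], nextUbo++ } );
        } else {
            assert( nextImage < imageCount );   // layout asked for more images than supplied
            writes.push_back( { i, layout[ i ], nextImage++ } );
        }
    }
    return writes;
}
```

In real Vulkan each record would be a VkWriteDescriptorSet handed to vkUpdateDescriptorSets, followed by vkCmdBindDescriptorSets and vkCmdBindPipeline on the command buffer.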

Now for GetPipeline. I'll skip the actual function because it mainly checks if the state bits match and if the optional separate ( FRONT/BACK ) stencil operations match. If it fails to find a pipeline it will instead create one. Time for stage two.
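As an aside, the find-or-create behaviour just described might look roughly like this. It's a sketch under heavy assumptions: `PipelineSketch` and `PipelineCacheSketch` are invented names, the pipeline handle is a plain int, and the stencil comparison is left out entirely so only the state bits are matched.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct PipelineSketch {
    std::uint64_t stateBits;
    int           handle;   // stand-in for a VkPipeline
};

class PipelineCacheSketch {
public:
    // Returns the pipeline built for these state bits, creating it on a miss.
    int GetPipeline( std::uint64_t stateBits ) {
        for ( const PipelineSketch & p : pipelines_ ) {
            if ( p.stateBits == stateBits ) {
                return p.handle;
            }
        }
        const int handle = CreatePipeline( stateBits );
        pipelines_.push_back( { stateBits, handle } );
        return handle;
    }

    int CreateCount() const { return createCount_; }

private:
    // Placeholder for the expensive part: filling out the create info and
    // compiling the pipeline. Here it only hands back a fresh id.
    int CreatePipeline( std::uint64_t /*stateBits*/ ) { return createCount_++; }

    std::vector<PipelineSketch> pipelines_;
    int                         createCount_ = 0;
};
```

Asking for the same state bits twice only pays the creation cost once, which is the whole point of front loading pipeline compiles.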

Sit on this for a while. Look at the RenderDoc pipeline diagram. Look at the code. Look at the diagram again. Look at the code. As questions arise, look up answers ( or ask me ). Read up on what each section or function does. It will take time for everything to sink in. But laid bare before your eyes is the whole graphics pipeline in a nutshell. You just need to understand how to plug all the bits in.

A graphics programmer's work is never done, but you my friend have made it. You've tackled every major component of Vulkan that VkNeo uses. Essentially if you understand this series, then you know how to write a Vulkan renderer for an existing game. There are small bits and pieces that we could ( and will ) cover in future posts. But like the Millennium Falcon flying through the Death Star, we ripped through Vulkan on this whirlwind tour of the API. So grab yourself some shawarma, you deserve it.

P.S. I will have a wrap-up post to sew everything together. I'll also be making future posts as I open source VkNeo. For now though, bask in the glory that you crossed the finish line. Now go out there and graphics or Vulkan or something.

Cheers