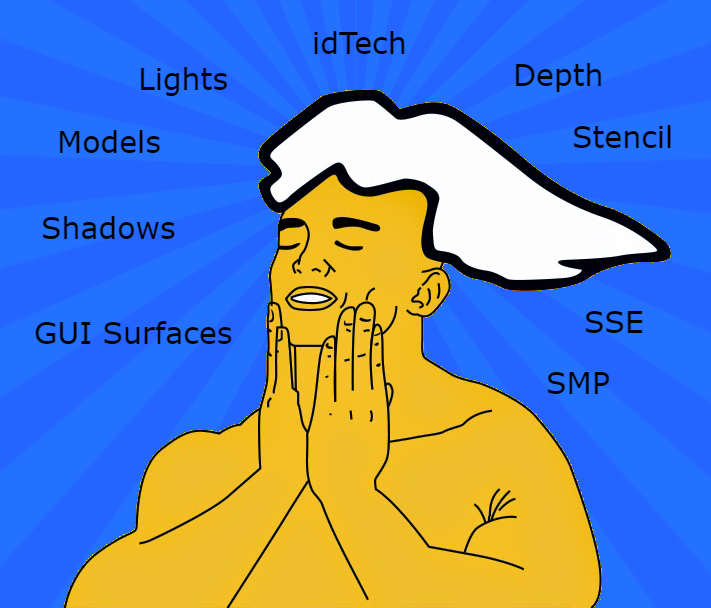

I Am Graphics And So Can You :: Part 4.5 :: idTech

We can all relate


As mentioned in my "Motivation & Effort" post, idTech is a lot of code, and the rendering APIs are only one component of it ( a very important component, though ). All this rendering code makes little sense without some context on how it's being used. So let's see exactly how idTech goes about invoking the magic.

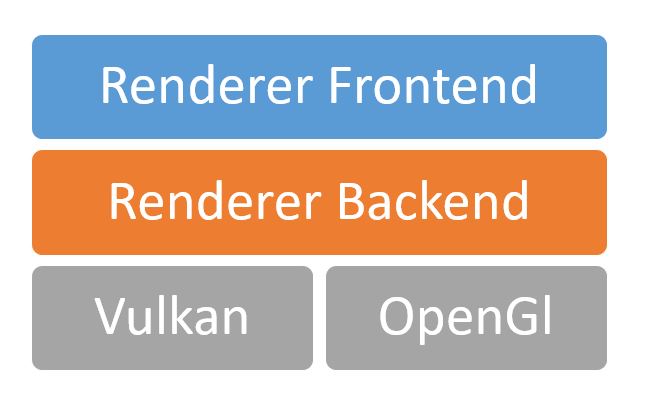

idTech4.x maintains the idea of both a frontend and a backend renderer. Most of what we've covered so far, and will cover going forward, deals with the backend. The two roles can be summed up like this.

- Frontend - Determine what's visible to draw.

- Backend - Draw what the frontend tells us is visible.

Here's a run down of the typical frontend callstack.

The frontend actually builds a list of render commands, which it then passes as a linked list to the backend's ExecuteBackendCommands. When I say render commands, it's nothing glorious. These are high level directives. Here are all the commands.

- RC_NOP - Do nothing

- RC_DRAW_VIEW_3D

- RC_DRAW_VIEW

- RC_COPY_RENDER

- RC_POST_PROCESS
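To make the handoff concrete, here is a minimal sketch of walking a linked list of commands and dispatching on the command id. The command names come from the list above; `CommandHeader`, `ExecuteCommandsSketch` and the string log are my own stand-ins for illustration, not the actual idTech types or signatures.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Command ids mirroring the list above.
enum RenderCommand { RC_NOP, RC_DRAW_VIEW_3D, RC_DRAW_VIEW, RC_COPY_RENDER, RC_POST_PROCESS };

// Hypothetical header: each command carries its id and a pointer to the next one.
struct CommandHeader {
    RenderCommand  commandId;
    CommandHeader* next;
};

// Walks the list front to back and records which handler would run.
// A real backend would call into the renderer here instead of logging.
std::vector<std::string> ExecuteCommandsSketch(const CommandHeader* cmds) {
    std::vector<std::string> log;
    for (const CommandHeader* c = cmds; c != nullptr; c = c->next) {
        switch (c->commandId) {
            case RC_NOP:          break;  // do nothing
            case RC_DRAW_VIEW_3D:
            case RC_DRAW_VIEW:    log.push_back("DrawView");    break;
            case RC_COPY_RENDER:  log.push_back("CopyRender");  break;
            case RC_POST_PROCESS: log.push_back("PostProcess"); break;
            default:              assert(false && "unknown render command");
        }
    }
    return log;
}
```

Feeding it a NOP, a draw view and a post process command would log DrawView followed by PostProcess, in list order.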

Frankly, everything but DrawView is fairly trivial. But now we're starting to see GL_* calls. Anytime you see this in idTech, it signifies a wrapper for some graphics API functionality. So we're close to Vulkan! Before we get ahead of ourselves though, let's further cement the relationship between the frontend and backend.

That's it! That's the separation. One of the big efforts in VkNeo was actually to corral all the OpenGL code into something with an API contract suitable for implementing Vulkan ( without losing existing functionality ). This resulted in the idRenderBackend, where there's a clear handoff of data and responsibility.

Now let's look at that DrawView call, shall we? Buckle up, the rabbit hole gets a bit deep. Note that this is idTech4.5 code with some slight modifications for VkNeo. You can already find the full OpenGL-only source on GitHub.

DrawView

This particular call accounts for ~99% of what you see in DOOM 3 BFG. The source has been distilled down for brevity, while capturing the core functionality.

So let's break down what this function is doing at a high level.

Establish Viewport

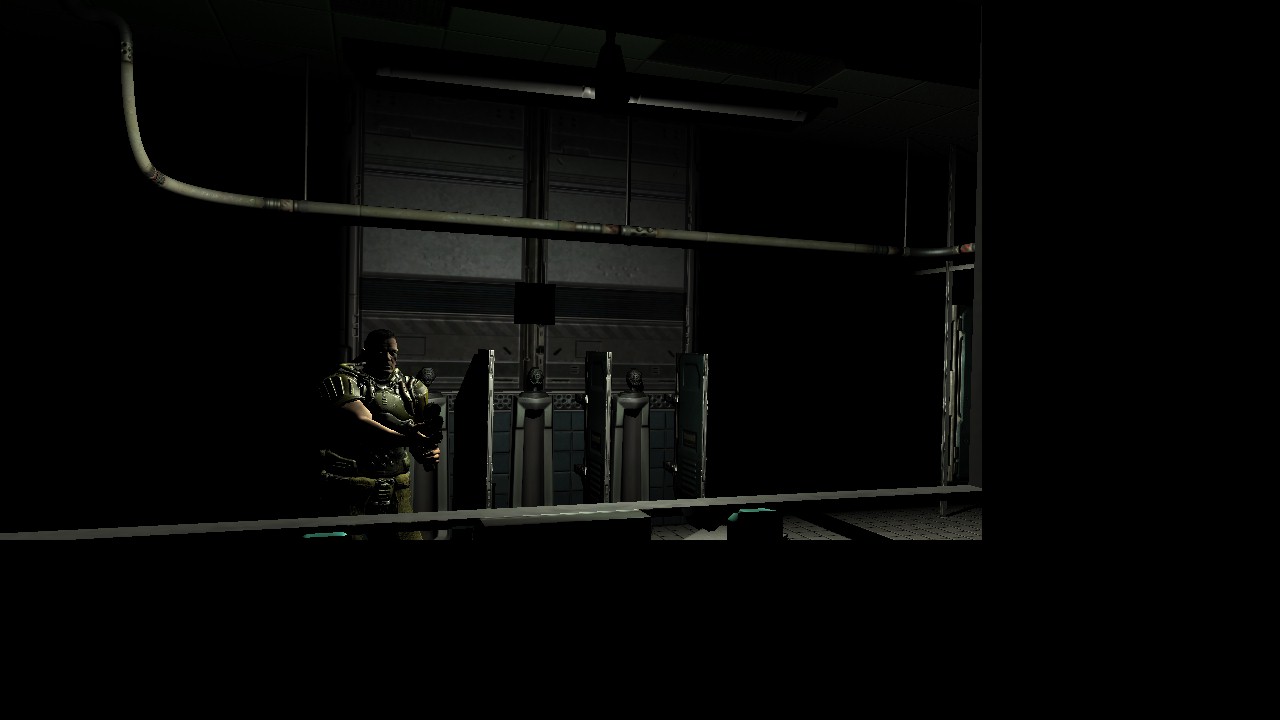

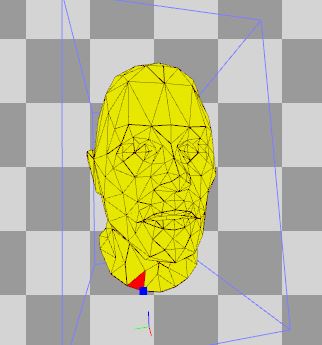

Basically the rectangle the view is being rendered into. This is your game window or full screen display. Let's look at our screen ( shot ). We'll be looking at the man in the mirror today.


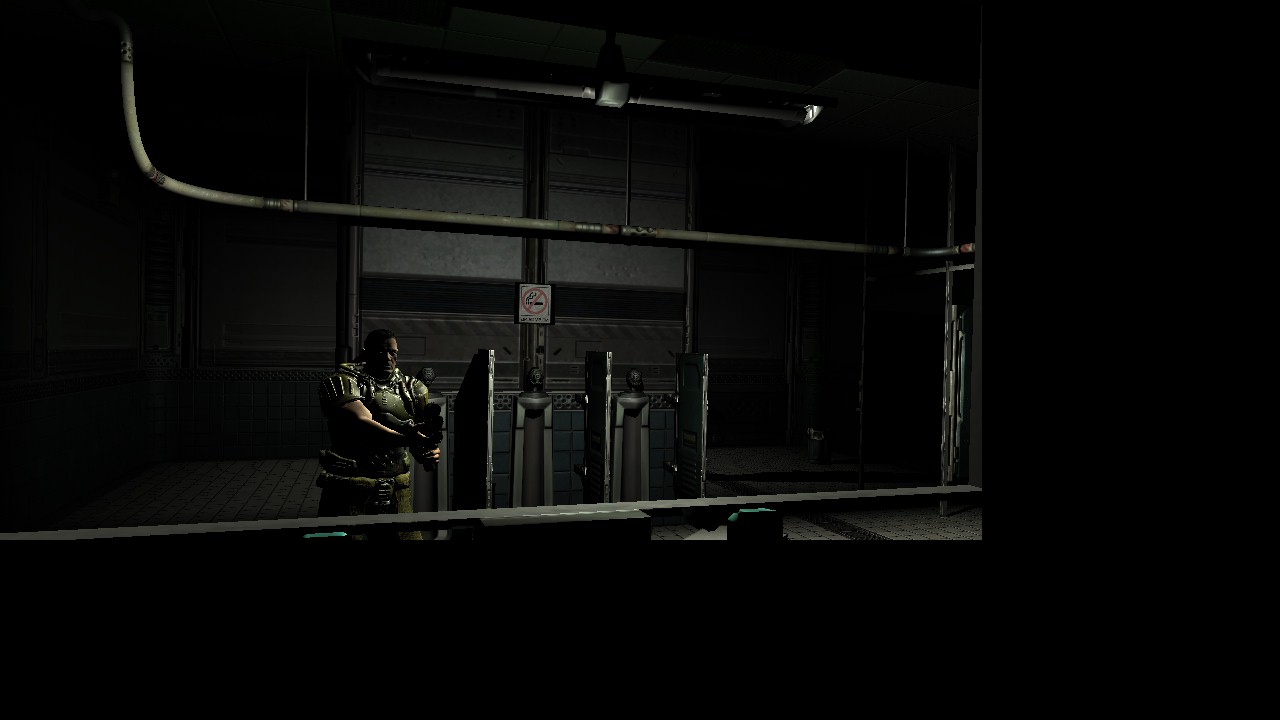

Setup Scissor

Just like cutting paper for a craft, the renderer can cut out a specific part of the viewport to render to. All operations outside this region are disregarded. In our case, mirrors are implemented via subviews. ( DrawView gets called for what we see in the mirror, and DrawView gets called again for the player's view of the mirror. ) The GL_Scissor for the subview looks like this.
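If it helps to see the idea as code, here is a rough sketch of what a scissor rectangle boils down to: clamp the requested region to the viewport, then reject anything outside it. `RectSketch`, `Intersect` and `PassesScissor` are hypothetical names for illustration, and the numbers in the usage note are made up. In practice the GPU does this test for you.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical rectangle: top-left corner plus size, in pixels.
struct RectSketch { int x, y, width, height; };

// Clamps one rectangle to another. An empty overlap yields zero width/height.
inline RectSketch Intersect(const RectSketch& a, const RectSketch& b) {
    assert(a.width >= 0 && a.height >= 0 && b.width >= 0 && b.height >= 0);
    const int x1 = std::max(a.x, b.x);
    const int y1 = std::max(a.y, b.y);
    const int x2 = std::min(a.x + a.width,  b.x + b.width);
    const int y2 = std::min(a.y + a.height, b.y + b.height);
    return { x1, y1, std::max(0, x2 - x1), std::max(0, y2 - y1) };
}

// True if the pixel lies inside the scissor region and may be written.
inline bool PassesScissor(const RectSketch& s, int px, int py) {
    return px >= s.x && px < s.x + s.width &&
           py >= s.y && py < s.y + s.height;
}
```

For example, a 1280x720 viewport intersected with a mirror region at ( 900, 200 ) sized 500x300 leaves a 380x300 scissor. Pixels outside it are simply dropped.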


GL_State

This toggles all the switches and turns all the knobs fed to it. This is probably the most contentious part between Vulkan and OpenGL for VkNeo. There are 64 bits of state ( uint64 ) in total telling the renderer to do different things. These bits are renderer agnostic. ( Item [ bits ] )

- Src & Dst Blend Functions [ 0 - 5 ]

- Depth Mask [ 6 ]

- Color Masks [ 7 - 10 ]

- Polygon Mode [ 11 ]

- Polygon Offset [ 12 ]

- Depth Functions [ 13 - 14 ]

- Cull Mode [ 15 - 16 ]

- Blend Op [ 17 - 19 ]

- Stencil State [ 20 - 47 ]

- Alpha Test State [ 48 - 57 ] - No longer used

- Depth Test Mask [ 58 ]

- Front Face [ 59 ]

- Separate Stencil [ 60 ]

- Mirror View [ 61 ]

Ok, I lied, Neo doesn't use all 64 bits. But it is getting crowded in there. Each of these items could be a separate post, but for now it's safe to just know they're all wrapped up in a nice little GL_State function that idTech4.5 uses.
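For a feel of how a handful of fields can share one uint64, here is a small sketch of packing and unpacking by bit range. The bit positions are taken from the list above; the `StateField` helper and the `*_FIELD` names are hypothetical, and the values stored in each field are placeholders rather than the engine's real constants.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical description of a field: first and last bit, inclusive.
struct StateField { unsigned first; unsigned last; };

// A few of the ranges from the list above.
constexpr StateField DEPTH_MASK_FIELD = { 6, 6 };
constexpr StateField DEPTH_FUNC_FIELD = { 13, 14 };
constexpr StateField CULL_MODE_FIELD  = { 15, 16 };
constexpr StateField STENCIL_FIELD    = { 20, 47 };

// Mask covering every bit of the field ( assumes the field is narrower than 64 bits ).
constexpr uint64_t FieldMask(StateField f) {
    return ((1ull << (f.last - f.first + 1)) - 1ull) << f.first;
}

// Writes a value into the field, leaving all other bits untouched.
inline uint64_t SetField(uint64_t state, StateField f, uint64_t value) {
    assert(value <= (FieldMask(f) >> f.first));  // value must fit in the field
    return (state & ~FieldMask(f)) | (value << f.first);
}

// Reads the field back out as a small integer.
constexpr uint64_t GetField(uint64_t state, StateField f) {
    return (state & FieldMask(f)) >> f.first;
}
```

The appeal of this layout is that a whole render state compares, copies and hashes as a single integer, which is handy when you need to tell whether anything changed between draws.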

GL_Clear

"My first" rendering operation.

RenderParms

Next we run into setting some render parms. Anytime you see RENDERPARM_ you can bank on this showing up in a shader's UBO ( uniform buffer ). Essentially, whenever a draw is called in idTech4.5, the renderparms relevant to the shader(s) being used are scooped up into a uniform buffer the shader(s) can use. For more information on UBOs, see Part 4.
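The "scooping up" can be sketched roughly like this: keep a table of parms, and at draw time copy only the ones the shader asks for into one contiguous block. Everything named here ( `RenderParmSketch`, `RenderParmTable`, `PackUniforms`, the three example parms, and the four-float layout ) is an assumption for illustration, not the engine's actual code.

```cpp
#include <array>
#include <cassert>
#include <vector>

// Hypothetical parm ids. The real engine has many more RENDERPARM_ entries.
enum RenderParmSketch { PARM_SCREEN_CORRECTION, PARM_MVP_X, PARM_COLOR, PARM_COUNT };

using Vec4 = std::array<float, 4>;

// Global table the renderer writes into as it sets parms. Starts zeroed.
struct RenderParmTable {
    std::array<Vec4, PARM_COUNT> values{};
    void Set(RenderParmSketch p, const Vec4& v) { values[p] = v; }
};

// Copies the parms a shader uses, in the shader's order, into one flat block
// that could then be uploaded as the uniform buffer contents.
std::vector<float> PackUniforms(const RenderParmTable& table,
                                const std::vector<RenderParmSketch>& used) {
    std::vector<float> ubo;
    ubo.reserve(used.size() * 4);
    for (RenderParmSketch p : used) {
        assert(p < PARM_COUNT);
        const Vec4& v = table.values[p];
        ubo.insert(ubo.end(), v.begin(), v.end());
    }
    return ubo;
}
```

A shader that uses two parms ends up with an eight float block, laid out in the order it declared them.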

FillDepthBufferFast

I mean everything is better fast right? Well some things. Anyways, yes depth buffers. To people with prior graphics experience this is old news. To someone new to graphics, depth buffers can be something hard to grasp. But I think often that's because they're not introduced to it in an intuitive way. Most people think of images as 2D flat surfaces. But in rendering, you need to cast aside those assumptions. Let's start with the basics, 0 to 1.

If you remember, in Part 3's CreateRenderTargets we set up a depth image with VK_FORMAT_D32_SFLOAT_S8_UINT. What this means is that depth and stencil share the same image, but we can ignore stencil for now. The depth part is composed of 32 bits encoded as a signed float. These 32 bits are stretched over the values of zero-to-one. Now, we're going to switch contexts on how we think of this image a couple times.

First let's think of it as color. What is 0? In graphics it's black. It's the absence of any color. What is 1? It's white as the color channel(s) are fully saturated. This is actually how physics works as well. Things appear black because little to no light is reaching our eyes from that object, whereas white is a large mixture of different frequencies.

But we're not trying to represent color data, we're trying to represent depth data, i.e. how far away is something? We use the 0-1 range to encode this distance. ( See, images can be more than just things to look at. ) Let's walk through a few draw calls and look at what FillDepthBufferFast produces for us. ( We'll start with the final image, then jump to the first and work back to the final. Keep Clicking!!! )

Cool huh? But what value is it? Well, think of it in natural terms. If you can't see the zombie behind the bathroom wall, does it exist? ( Not talking Copenhagen Interpretation or anything. ) What I mean is, does it or should it exist to the renderer? Why waste time drawing it if the player can't see it? This is what depth helps you determine. Let's look at one final example to further cement things. I'll use a simpler depth image to demonstrate ( this time of the actual player looking at the mirror ).

If we took just this slice of what was visible and shot rays at it, how far would they reach? It would look something like this graph.

Anything in the white space of the graph essentially wouldn't be rendered. See, not so magical after all right? Any concept, once you dig deep down, has a simple truth. The hardest thing to learn often is "how" to think about things; not the "what".
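Here is the same idea as a tiny software depth buffer. I'm assuming 1.0 means "as far as possible", that the buffer is cleared to 1.0, and that a fragment wins only if it is strictly closer than what's already stored. Those conventions, and the `DepthBufferSketch` type, are assumptions for the sketch and not a claim about how the engine configures its depth test.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical CPU-side depth buffer: one float in [0, 1] per pixel.
struct DepthBufferSketch {
    int width;
    int height;
    std::vector<float> depth;

    // "Clear" to 1.0: nothing has been drawn, so everything is infinitely far away.
    DepthBufferSketch(int w, int h)
        : width(w), height(h), depth(static_cast<std::size_t>(w) * h, 1.0f) {}

    // Returns true if the fragment is the closest thing seen so far at this pixel,
    // and records its depth. Returns false if something nearer already covers it.
    bool TestAndWrite(int x, int y, float z) {
        assert(x >= 0 && x < width && y >= 0 && y < height);
        assert(z >= 0.0f && z <= 1.0f);
        float& stored = depth[static_cast<std::size_t>(y) * width + x];
        if (z < stored) {
            stored = z;
            return true;
        }
        return false;
    }
};
```

Draw the bathroom wall at 0.5 and the zombie behind it at 0.8 fails the test at that pixel. The renderer never has to shade it.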

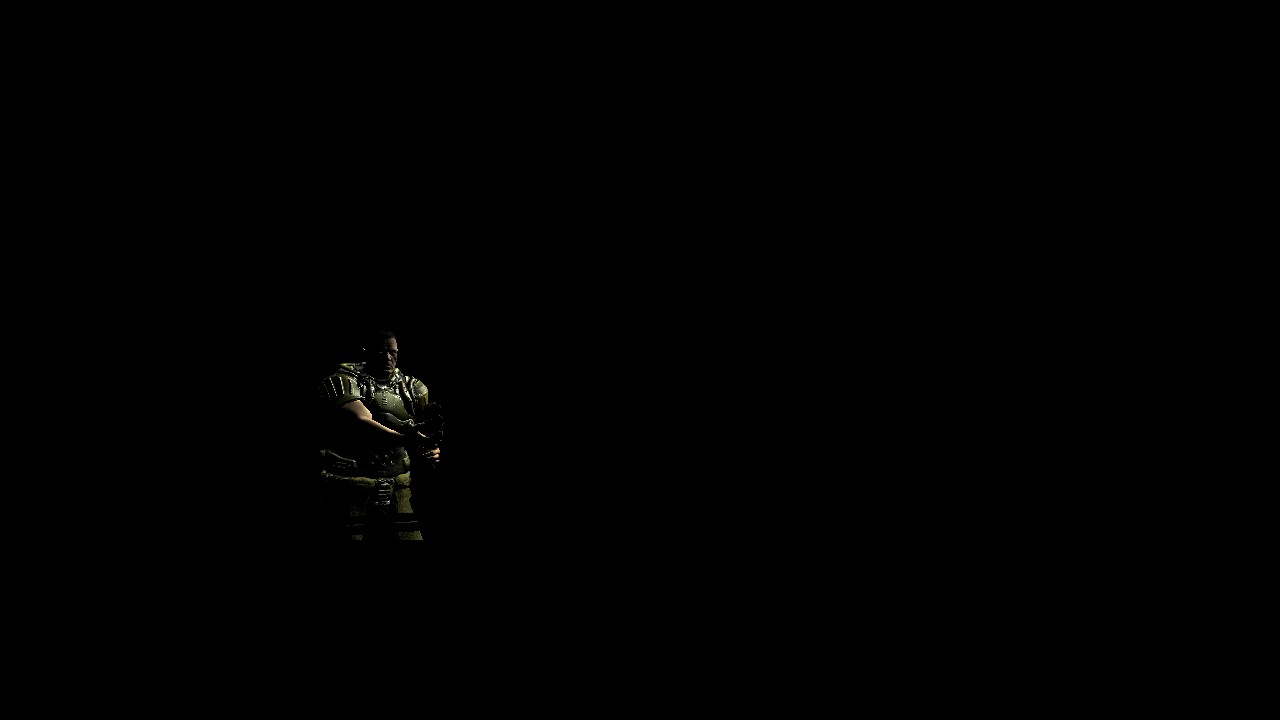

DrawInteractions

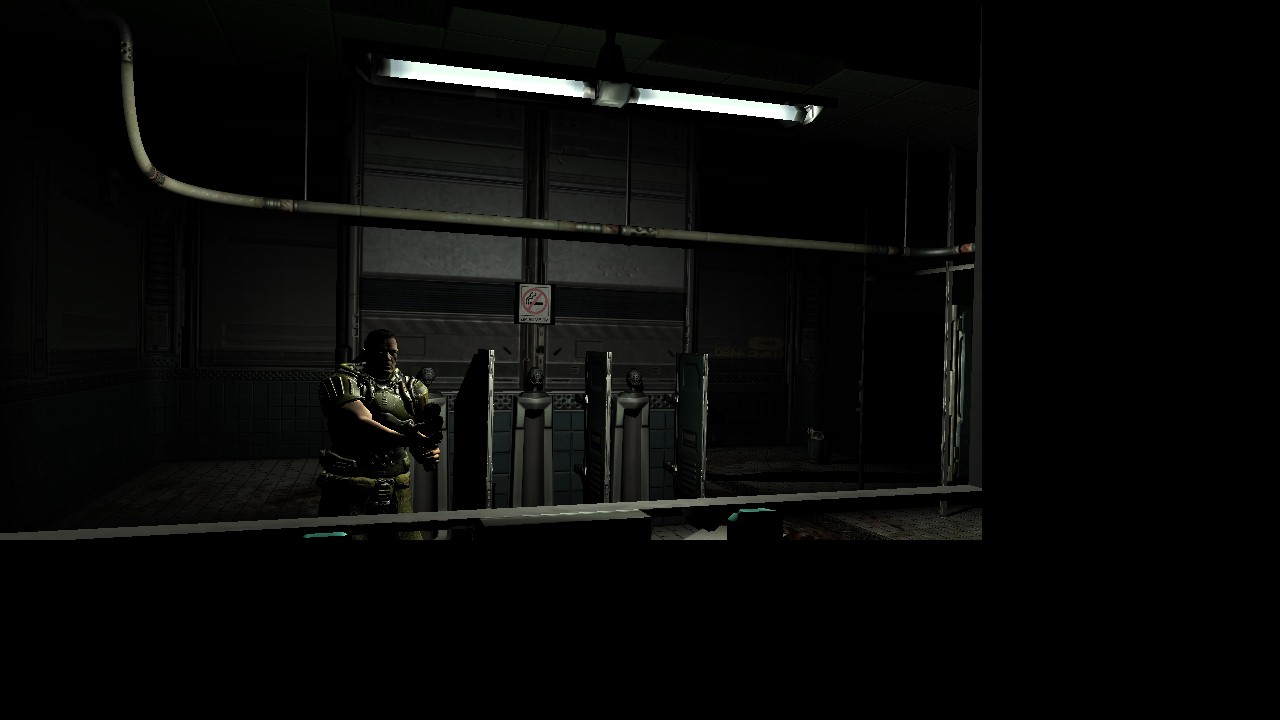

So right now our color buffer looks like this.

Not very pretty, right? This is where we start introducing light into the scene. DrawInteractions goes over every light that touches ANYTHING visible in the scene and, for each one, checks whether to perform a series of actions.

- Global Light Shadows -> StencilShadowPass

- Local Light Interactions -> RenderInteractions

- Local Light Shadows -> StencilShadowPass

- Global Light Interactions -> RenderInteractions

- Translucent Interactions -> RenderInteractions
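The per-light loop can be sketched as below. The order of the five steps and the two pass names come straight from the list above; the `LightSketch` flags and the string log are hypothetical stand-ins for the real light data and draw calls.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical per-light flags: which kinds of work this light has queued up.
struct LightSketch {
    bool globalShadows;
    bool localInteractions;
    bool localShadows;
    bool globalInteractions;
    bool translucentInteractions;
};

// Visits each light and records the passes it would trigger, in the listed order.
std::vector<std::string> DrawInteractionsSketch(const std::vector<LightSketch>& lights) {
    std::vector<std::string> passes;
    for (const LightSketch& light : lights) {
        if (light.globalShadows)           passes.push_back("StencilShadowPass:global");
        if (light.localInteractions)       passes.push_back("RenderInteractions:local");
        if (light.localShadows)            passes.push_back("StencilShadowPass:local");
        if (light.globalInteractions)      passes.push_back("RenderInteractions:global");
        if (light.translucentInteractions) passes.push_back("RenderInteractions:translucent");
    }
    return passes;
}
```

A light with nothing queued contributes no passes at all, which is the code form of "nothing is drawn unless it is being lit".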

I'll touch on stencil shadows at some other point, or we'll just be here all night. People have a natural intuition of how light reveals things. So let's see how the scene gets rendered in steps. Click through the following gallery. Remember that this is all driven by lights in the scene. Nothing is drawn unless it is being lit.

DrawShaderPasses

Last but not least, we put on the final polish. These draws are not related to the lighting environment so the shader passes happen outside of DrawInteractions. Click through this gallery to see that coat of paint go on.

And that's it! With these three procedures we've produced > 90% of what people would consider the visible game. But, But, But.... you might be wondering why I spent so much time on the depth buffer section and not the color ones. Very astute observation, Watson!

"You know my method. It is founded upon the observation of trifles."

Regardless of how splendid the end result, the core functionality producing it can be, and is, quite simple ( elementary, one might say ). Often the complexity is in the data, not necessarily the code. This is another major deterrent to rendering for some people. It's not the API, or the systems, or architecture, or hardware. It's the components of data and how they relate to one another. That sea of entropy has swallowed many a soul. But you can weather the storm with a prepared mind. Remember, the difficulty isn't the facts, but the way in which you think about them.

So let's think about what's going on. How was this scene produced? There are a lot of directions we could take this; going down all sorts of rabbit holes. But we're here to learn Vulkan remember? ( oh yeah that. And I'd have to start charging tuition to cover everything else ) Essentially it comes down to collecting enough details to submit for a draw call. This includes things such as...

- GLState bits mentioned before.

- Surfaces -> vertices to draw

- Lights affecting the surface

- Textures associated with the draw

- Render parms associated with the draw.

That can seem like an impossible symphony to orchestrate. But wait, that's the whole reason I started off this series about Intuition in Part 2. Each draw call just goes down the graphical pipeline and emerges out the other side as a series of pixels. So the details we collect inform the pipeline how to behave. ( I go through each stage of the pipeline in Part 2 from a high level, so I won't reiterate that here. ) So before we go, let's look at one more thing that demonstrates all of this. Then in the next article we'll take the plunge into drawing with Vulkan.

We'll look at DrawSingleInteraction. This is just a few steps down the trail from DrawInteractions we looked at above. In it you'll notice what I'm talking about regarding gathering data. The frontend's job is to take the representation of the world, and break it into a collection of surfaces. The backend then looks at these surfaces, the associated entities, and the lights in view and just steps through each surface 1-by-1 calling draw on it.

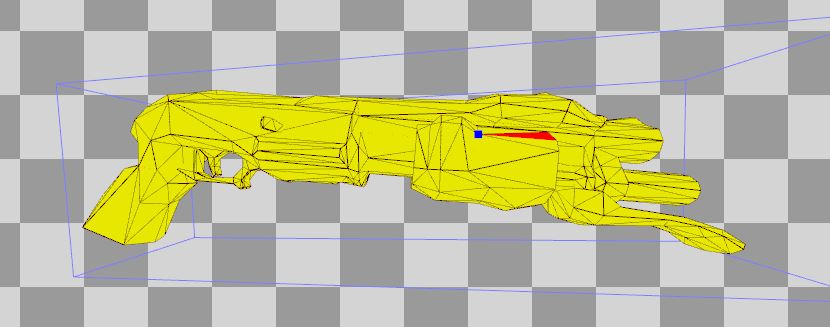

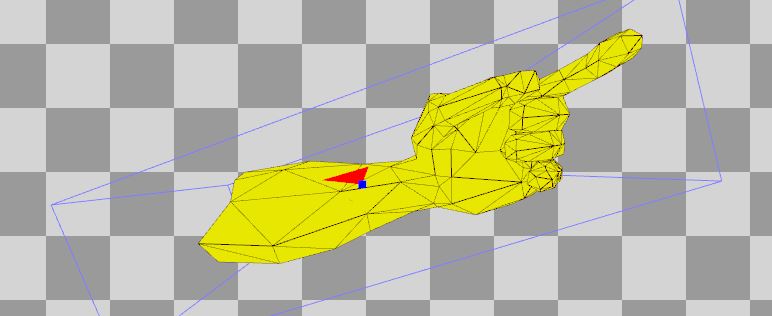

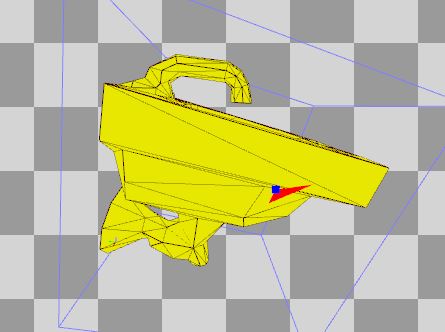

DrawElementsWithCounters( surf ) is where this train stops. But what is a surface you might ask? Here are a few examples. ( click, click, click, click, )

See not so unfamiliar after all? It can all seem alien, but it's just a familiar face with a different guise.